Interview with the ETSI Standards Organization That Created TETRA "Backdoor"

Brian Murgatroyd spoke with me about why his standards group weakened an encryption algorithm used to secure critical radio communications of police, military, critical infrastructure and others.

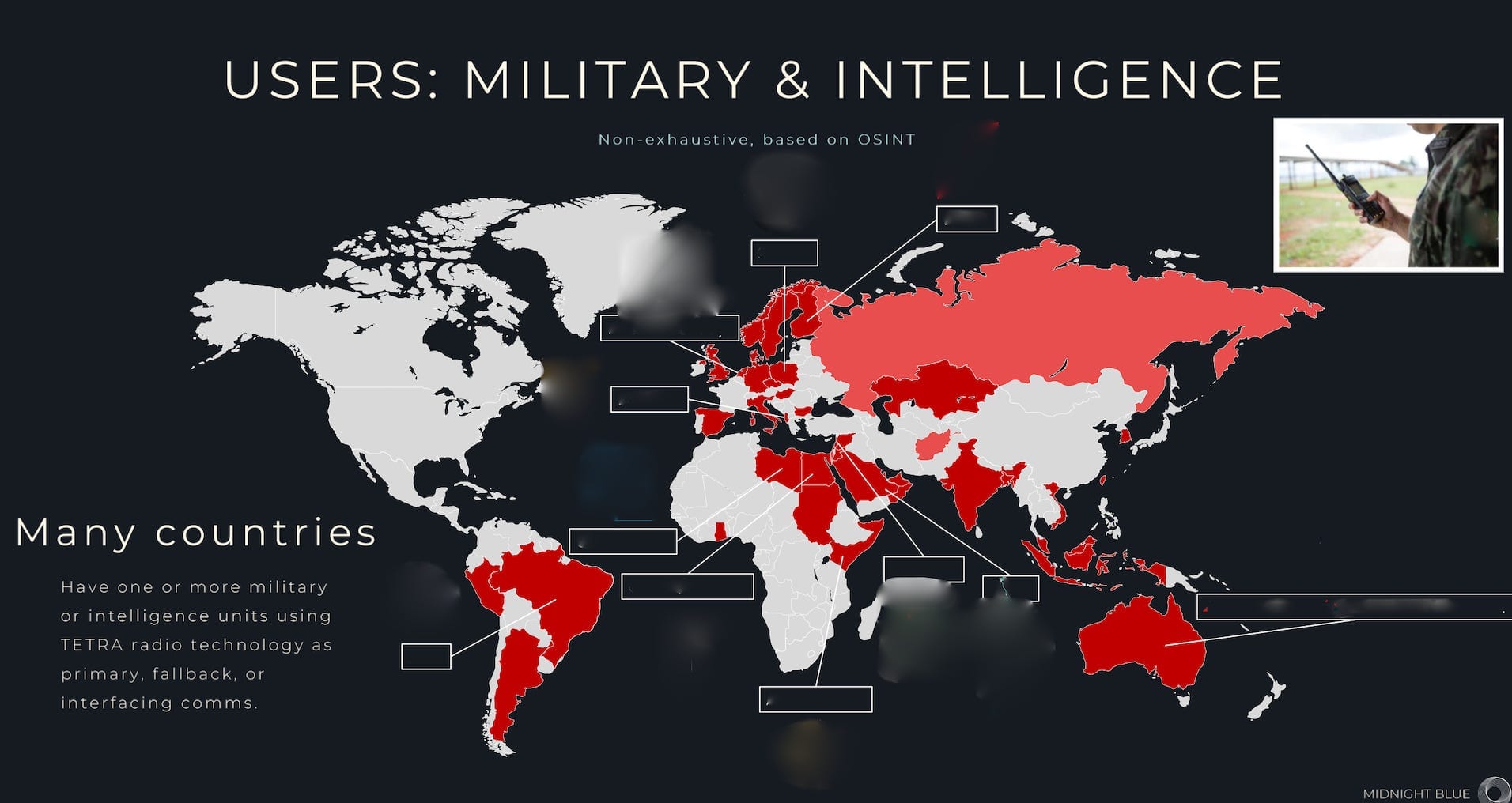

For 25 years, police, military, intelligence agencies and critical infrastructure around the world have used a radio technology called TETRA for critical communications, a technology they assumed was secure. But a group of Dutch researchers recently got hold of the secret algorithms used in TETRA and found it was anything but.

Most people have never heard of TETRA — which stands for Terrestrial Trunked Radio. The standard governs how radios and walkie-talkies used by the vast majority of police forces around the world, as well as many others, handle critical voice and data communications.

TETRA was developed in the 1990s by the European Telecommunications Standards Institute (ETSI) and is used in radios made by Motorola, Damm, Hytera and others. But the flaws in the standard remained unknown because the four encryption algorithms used in TETRA — known as TEA1, TEA2, TEA3, and TEA4 — were kept secret from the public. The standard itself is public, but the encryption algorithms are not. Only radio manufacturers and others who sign a strict NDA can see them.

The researchers (Carlo Meijer, Wouter Bokslag, and Jos Wetzels of the Dutch cybersecurity consultancy Midnight Blue) say that TETRA is one of the few remaining technologies in this area that still relies on secret, proprietary cryptography. Keeping the algorithms secret is bad for national security and public safety, they argue, because it prevents skilled researchers from examining the code and uncovering flaws so they can be fixed. When secrecy is treated as the guarantee of security (security through obscurity), actors intent on finding the vulnerabilities, such as nation-state intelligence agencies or well-resourced criminal groups, are free to exploit them unimpeded, while users remain unprotected.

The researchers were able to extract the algorithms from a Motorola radio and reverse-engineer them, which led them to discover an intentional backdoor coded into one of TETRA’s four secret encryption algorithms.

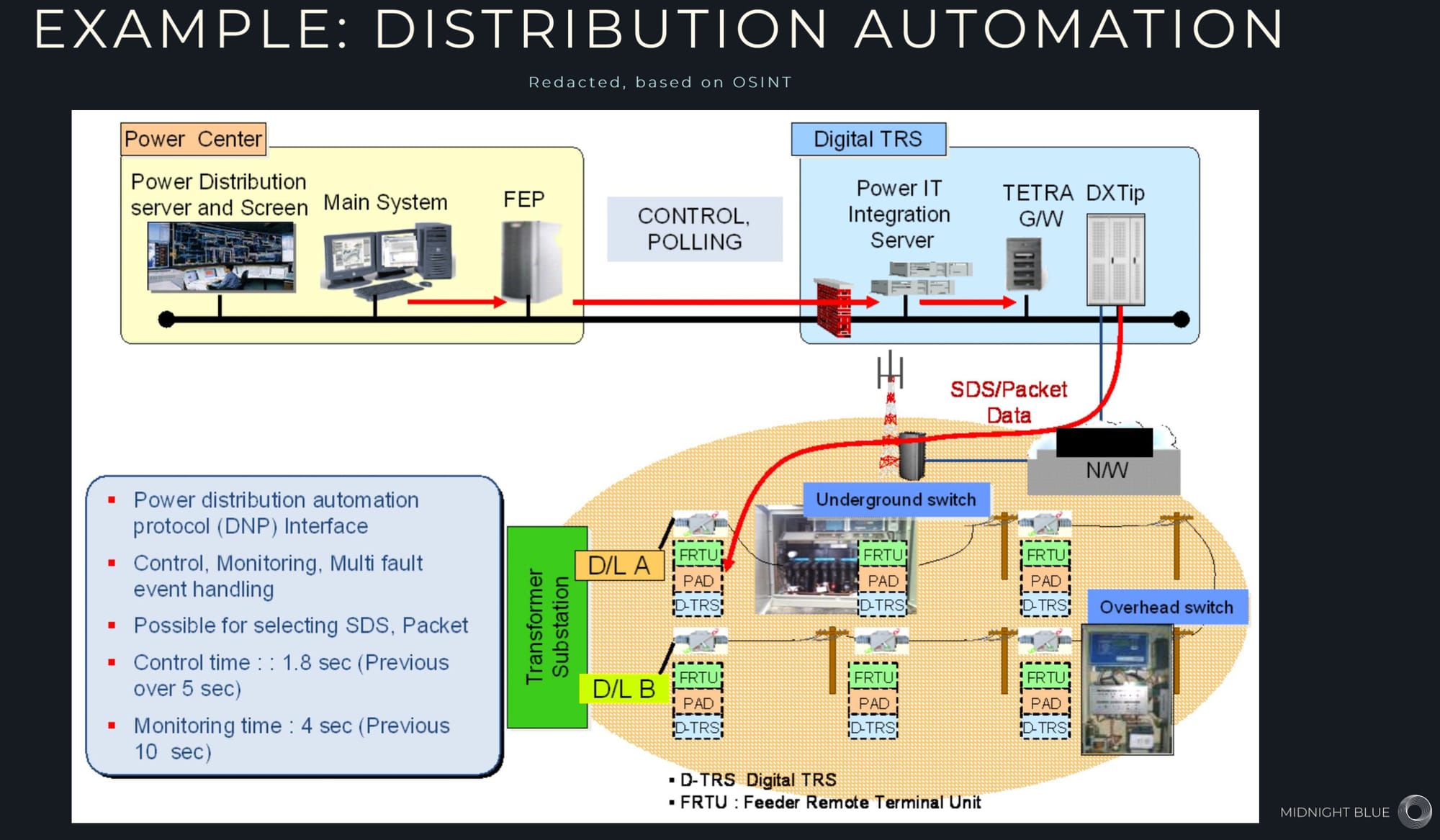

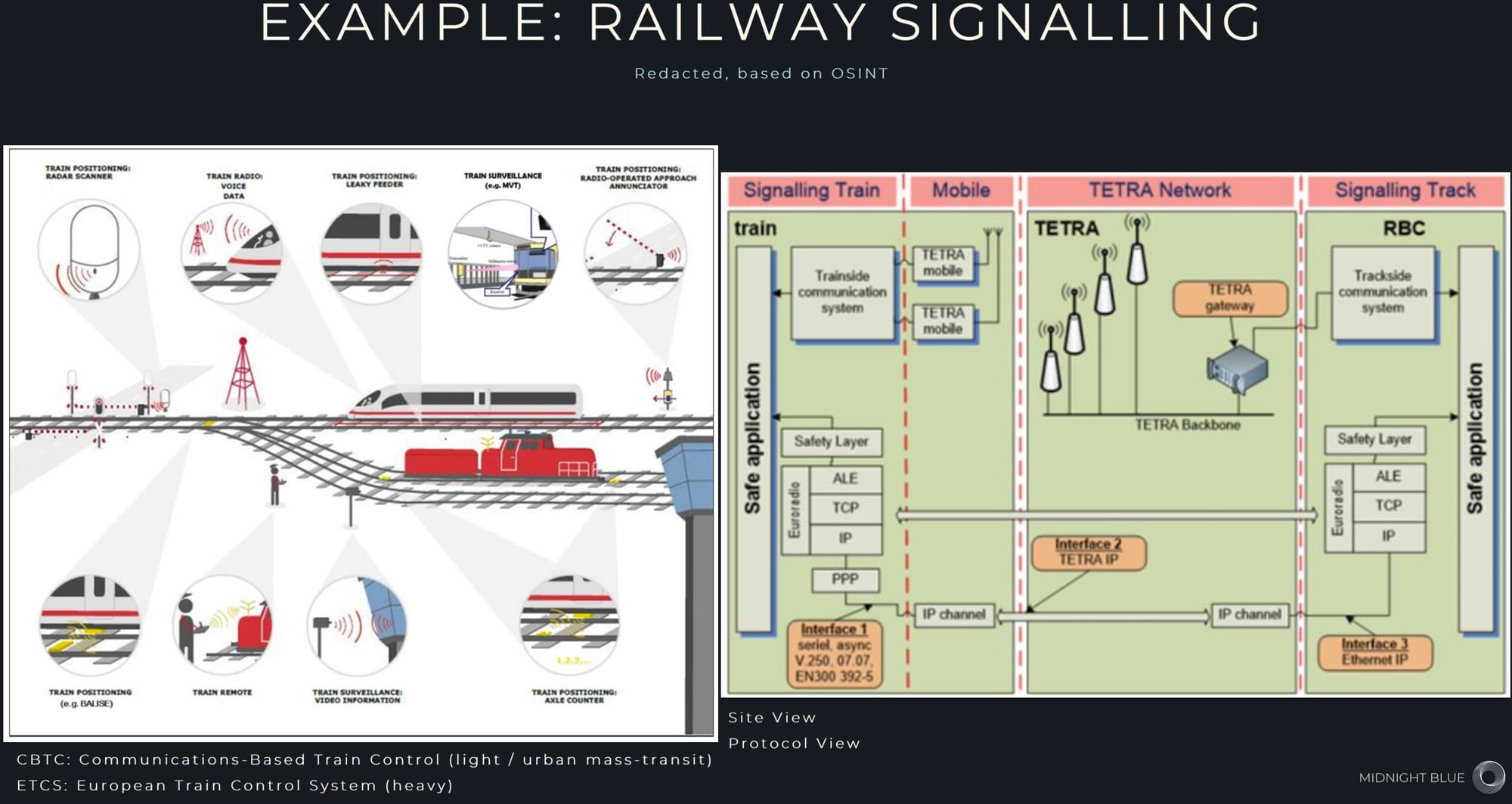

As I wrote in a story published by WIRED, the algorithm, TEA1, is used primarily by critical infrastructure to secure data and commands in pipelines, railways, and the electric grid, but it’s also used by some police agencies and militaries around the world. Publicly, the algorithm is advertised as using an 80-bit key, but the researchers found it contained a secret feature that reduces it to a 32-bit key, allowing them to crack the key in less than a minute using a standard laptop.
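To put the 80-bit versus 32-bit difference in concrete terms, the short Python sketch below does the brute-force arithmetic. It assumes, for illustration only, that the “less than a minute” search of the full 32-bit space takes exactly 60 seconds, and then asks how long the same hardware would need for a key with a true 80 bits of entropy. The names `keyspace`, `search_seconds` and `RATE` are hypothetical, and nothing here reproduces the researchers’ actual attack.

```python
# Worked arithmetic for exhaustive key search (a sketch, not an attack tool).
# Assumption: "less than a minute" is rounded to exactly 60 seconds for the
# full 32-bit search; the real rate depends on the attacker's hardware.

SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year


def keyspace(bits: int) -> int:
    """Number of keys an exhaustive search must try in the worst case."""
    return 2 ** bits


def search_seconds(bits: int, keys_per_second: float) -> float:
    """Worst-case time to try every key of the given effective size in bits."""
    return keyspace(bits) / keys_per_second


# Key-testing rate implied by covering 2**32 keys in 60 seconds.
RATE = keyspace(32) / 60

# Each extra bit doubles the work, so 80 bits is 2**48 times more than 32 bits.
ratio = keyspace(80) // keyspace(32)
years_80 = search_seconds(80, RATE) / SECONDS_PER_YEAR

print(f"implied rate: {RATE:,.0f} keys per second")
print(f"32 effective bits: {search_seconds(32, RATE):.0f} seconds")
print(f"80 effective bits: {years_80:.3g} years ({ratio:,} times the work)")
```

Under that assumption, a search over a genuine 80 bits of entropy would take roughly half a billion years, because every additional bit doubles the work.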

The researchers are calling it a backdoor, because the reduction isn’t a glitch; the algorithm was designed this way to make it intentionally weak. ETSI says it’s not a backdoor and that the algorithm was weakened because this was the only way it could be exported and used outside of Europe. But as a result of the backdoor, malicious actors who crack the key, as the researchers did, would be able to snoop on police communications or intercept critical infrastructure communications to study how these systems work. And they could also potentially inject commands to the radios to trigger blackouts, halt gas pipeline flows, or re-route trains.

In addition to the backdoor, the researchers found another critical problem that wasn’t a weakness in the algorithms but a flaw in the underlying standard and protocol. It would allow malicious actors to not only decrypt critical radio communications of police, military and others, but also to distribute false messages to radios that could deceive or misdirect personnel in a crisis situation.

To address the issues, ETSI created three additional algorithms to replace the previous ones. They are called TEA5, TEA6, and TEA7. But these also are secret, which means no outside experts have examined them to determine if they are secure.

I spoke with Brian Murgatroyd, chair of the technical body at ETSI responsible for developing the TETRA standard and algorithms. We talked about who was behind the decision to keep the algorithms secret, why they weakened one of the cryptographic keys, and why the group plans to keep the new algorithms it created secret as well.

For context I’d recommend reading the WIRED story before reading this interview. And here’s a quick primer on the four TETRA encryption algorithms and who uses them:

TEA1: for commercial use, primarily by critical infrastructure around the world, but also by some police and military agencies outside of Europe.

TEA2: considered a more secure algorithm; designed for use only in radios and walkie-talkies sold to police, military, intelligence agencies and emergency personnel in Europe.

TEA3: essentially the export version of TEA2, for use outside of Europe by the same kinds of entities that use radios with TEA2.

TEA4: also for commercial use, but hardly used, the researchers say.

KZ: Let’s talk about TEA1 and the backdoor the researchers found in it.

BM: The researchers found that they were able to decrypt messages from this, using a very high-powered graphics card in about a minute. It highlights the fact that over the course of a quarter of a century that we’ve had this algorithm, that now it’s maybe a bit vulnerable.

KZ: A bit?

BM: [Laughs] Yeah okay. I would say it’s vulnerable if you happen to be an expert and have some pretty reasonable equipment. [But] it’s not as if you can just plug in some kind of radio and then decrypt this without a fair degree of knowledge.

KZ: But there are people in the world who do have that skill — nation-state actors, intel agencies, maybe also insurgents in military environments and drug cartels in Mexico, who either have the knowledge and resources or can acquire them.

BM: TEA algorithms are only there to protect [communications] at a certain level. People who want high security have to use additional measures. For instance in the UK,… we use TEA2, which hasn’t had a problem. But on top of that, we’d always use end-to-end encryption as well … to give us a kind of double protection. And I would expect that anybody … who need a lot of protection would not just be using TEA1. Within Europe… I would suggest that anyone who needed high security would be using TEA2. Outside Europe… for those people needing that kind of level of security, use TEA3…. The problems generally are that TEA2 is only licensed for use within Europe by public safety authorities. And TEA3, for the same purpose outside Europe.

KZ: TEA1 is used for data communication in critical infrastructure — pipelines, railway signaling, airport environments, and the electric grid… these environments don’t historically have good security, nor do they have the funds and resources to apply end-to-end encryption. And if you’re not emergency services, you cannot switch to TEA2. So critical infrastructure wouldn’t be able to switch.

BM: But TEA3 would be available to states that are considered able to get TEA3. In other words, everything is under export control. TEA1, 2, and 3 were all designed for specific purposes and are really constrained by export-control regulations [such as the] Wassenaar Arrangement.

So a company exporting from the US, for instance, would obviously come under [US] export control regulations [and] it would depend really on their judgment as to what algorithm could be released. There have been cases of companies in western Europe trying to supply to customers abroad and being refused [permission to sell] TEA3… and so they had to go to TEA1…. TEA1 was designed in the early 90s to meet export-control requirements.

KZ: How did it go about meeting those requirements, because that’s the one they’re saying has a backdoor in it. Was that the condition for export?

BM: Backdoor can mean a couple of things I think. Something like you’d stop the random number generator being random, for instance. [But] what I think was revealed [by the researchers] was that TEA1 has reduced key-entropy. So is that a backdoor? I don't know. I’m not sure it’s what I would describe as a backdoor, nor would the TETRA community I think.

KZ: I spoke with cryptographers who said there’s no way you would allow an 80-bit key to get reduced to 30 bits if you didn’t intend for it to be breakable. And it’s advertised as an 80-bit key algorithm. People do not know there’s this reduction in it. So that would imply there’s a secret about the reduction. And because it’s a proprietary algorithm, no one has been able to examine this publicly without signing an NDA. This is the first time in a quarter of a century that someone has been able to publicly access the algorithms to examine them.

BM: Absolutely correct. Yeah. The algorithms are private.

KZ: People … believe they're getting an 80-bit key and they’re not.

BM: Well it is an 80-bit long key. [But] if it had 80 bits of entropy, it wouldn’t be exportable.
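Murgatroyd’s distinction between key length and key entropy can be illustrated with a toy example. The sketch assumes a hypothetical `expand_seed` function that stretches a short secret into an 80-bit value using truncated SHA-256; it is not how TEA1 performs its reduction, which this interview does not describe. However long the resulting key is, an attacker only has to enumerate the short secret, so the effective key size is the seed size. The demo uses a 16-bit seed so that the search finishes instantly.

```python
import hashlib

KEY_BITS = 80  # advertised key length in bits


def expand_seed(seed: int, seed_bits: int = 32) -> int:
    """Hypothetical: stretch a short secret into an 80-bit key.

    NOT the TEA1 mechanism; SHA-256 truncation is an illustrative stand-in.
    """
    if not 1 <= seed_bits <= 32:
        raise ValueError("this toy supports seeds of 1 to 32 bits")
    if not 0 <= seed < 2 ** seed_bits:
        raise ValueError("seed out of range")
    digest = hashlib.sha256(seed.to_bytes(4, "big")).digest()
    # Keep the first 10 bytes (80 bits) of the digest as the "key".
    return int.from_bytes(digest[: KEY_BITS // 8], "big")


def recover_seed(target_key: int, seed_bits: int):
    """Brute-force the seed, not the key: at most 2**seed_bits attempts."""
    for seed in range(2 ** seed_bits):
        if expand_seed(seed, seed_bits) == target_key:
            return seed
    return None


# Demo with a 16-bit seed so the search finishes instantly.
key = expand_seed(12345, seed_bits=16)
print(f"80-bit key: {key:020x}")
print("recovered seed:", recover_seed(key, seed_bits=16))
```

With a 32-bit seed the same loop would need about 4.3 billion attempts in the worst case, still a small number for modern hardware, even though every key it produces is 80 bits long.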

KZ: So that was a condition — for it to be exportable — that it had to have that reduction in it.

BM: Yep. I think that’s an important point that we’d like to make. That, you know, given the choice, obviously, we would have preferred to have as strong a key as possible in all respects. But that just wasn’t possible because of the need for exportability.

KZ: What you’ve told me doesn’t really contradict what the researchers say, even though you don’t like the term “backdoor.” It was intentionally weakened — for it to be exported. That is essentially a backdoor.

BM: No comment. It was designed to meet export-control regulations. I think that’s as far as we can say.

KZ: Why not tell people the key is [only] 30 bits of entropy?

BM: Well, 32 bits. The time doubles [with] every bit you have, so it’s significant but not that significant. So, why not indeed? Because the algorithms were private, and therefore only available under NDA.

KZ: You can keep the algorithm private but still provide people with information about the key so they can make informed decisions about whether or not to use it based on how secure it might be. By withholding that information, it feels like a secret.

BM: At the end of the day, it’s down to the customer organization to ensure that things are secure enough for them. Now, I agree that’s difficult with a private algorithm. The manufacturer knows the length of the key, but it’s not publicly available. But the reason we have three different algorithms available must be clear to somebody that they’re not all as secure as each other.

[An ETSI spokeswoman interjects that the vendors knew about the reduction and would have been able to tell customers about mitigations they could take if using TEA1.]

KZ: So you have the vendor, let’s say Motorola, marketing these radios. The customer doesn’t know there are three different algorithms. Motorola is going to market to the customer only what that customer can purchase. So if you're critical infrastructure, you’re not going to be told there are these other two algorithms used in other products so they can make informed decisions about whether or not one is more secure than the other. The customer never gets that choice to go with other algorithms that are more secure. You're not told about more secure algorithms used in other products that you can't actually buy.

BM: Right. Okay. I agree…. Some user organizations will undertake risk analysis, and others won’t…. I suppose all I can say is that 25 years ago the length of this algorithm was probably sufficient to withstand brute-force attacks. But over all the years since then, clearly, computing has … [doubled] its strength every 18 months and now it is relatively easy [to compromise], as the researchers have shown.
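Murgatroyd’s “doubling every 18 months” remark can be turned into rough numbers. The sketch assumes a steady doubling every 18 months for 25 years, which is a simplification, and the function names are illustrative. It estimates how much faster computing became over that span and, as a consequence, how long a search that takes one minute today would have taken on hardware from 25 years earlier.

```python
# Worked arithmetic for the "doubling every 18 months" remark.
# Assumption: a clean doubling every 18 months for 25 years; real hardware
# progress (and GPU-based key search in particular) did not follow a neat curve.


def doublings(years: float, months_per_doubling: float = 18.0) -> float:
    """How many times computing power doubles over the given span."""
    return years * 12 / months_per_doubling


def speedup(years: float, months_per_doubling: float = 18.0) -> float:
    """Overall speed-up factor implied by steady doubling."""
    return 2 ** doublings(years, months_per_doubling)


n = doublings(25)
factor = speedup(25)
# A search taking one minute today would, under this model, have taken
# `factor` minutes on hardware from 25 years earlier.
days_then = factor / (60 * 24)

print(f"{n:.1f} doublings, speed-up of about {factor:,.0f}x")
print(f"a 1-minute search today would have taken about {days_then:.0f} days then")
```

Under this simplified model, roughly 16.7 doublings means hardware that now searches a 32-bit keyspace in a given time would, 25 years ago, have covered only about a 15-bit keyspace in the same time. Whether that made 32 bits “sufficient” back then is Murgatroyd’s assumption, not something the arithmetic settles.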

KZ: You’re saying 25 years ago 32 bit would have been secure?

BM: I think so. I can only assume. Because the people who designed this algorithm didn’t confer with what was then EP-TETRA [ETSI Project-TETRA is the name of the working group that oversaw the development of the TETRA standard]. We were just given those algorithms. And the algorithms were designed with some assistance from some government authorities, let me put it that way.

KZ: Let’s walk through that process. So ETSI receives a request to produce algorithms? How does this work?

BM: In the case of TEA2, this was funded by some European police forces … to be kept separate from the other ETSI algorithms. [It’s] the same for TEA5, which is the equivalent of TEA2 in our [new] algorithms. So TEA2 is separately funded and is controlled separately from the other ETSI algorithms because it’s only to be used really in EU countries, plus one or two others, and is really tightly controlled. We allow the military to use it, for instance, as well as blue-light public safety [agencies].

KZ: Who funded TEA3?

BM: That was the same as TEA1. That was a normal ETSI algorithm and is controlled by ETSI in the same way as TEA1. But that is designed for public safety use in non-European countries where export control can be granted.

Back in the early 90s, it was before my time, ETSI had a special committee called SAGE — Security Algorithm Group of Experts — who were tasked to develop these algorithms for TETRA. They did that and then gave those algorithms to what was then ETSI’s Project-TETRA. That was the process. Now, exactly what their process was [after that] we’ll never know.

KZ: So ETSI Project-TETRA decides they need new algorithms and then SAGE consults with the government?

BM: They did at the time, because some of the members of SAGE at the time were members of government.

KZ: And then who developed the algorithms?

BM: SAGE did. They are all cryptographers.

KZ: So they decide they’re going to have tiers of algorithms: one is for commercial use that is exportable, which is TEA1. And it has to have this key reduction in order to meet what the government deems acceptable for export. Is that correct?

BM: That’s what we now know yeah — that it did have a reduced key length.

KZ: What do you mean we now know? SAGE created this algorithm but the Project-TETRA people did not know it had a reduced key?

BM: That’s correct. Not before it was delivered. Once the software had been delivered to them under the confidential understanding, that’s the time at which they [would have known].

We’ve [now] mitigated all these things [the researchers found]. We didn’t actually use SAGE this time to develop the [new] algorithms. We formed a special group, a special committee of ETSI called TETRA Security Algorithms to develop the [new algorithms]….

The encryption algorithm is actually only one part of the TETRA security. We’ve got quite a strong authentication mechanism…. The base station tries to authenticate the terminal, and the terminal will then confirm by authenticating the base station, which prevents false base station attacks. The third part of TETRA security is the ability to disable terminals, either permanently or temporarily. Anyone … reporting a loss of radio … or if it’s stolen, they can permanently disable it, which removes even the long-term secret keys from the radio.
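The challenge-response pattern Murgatroyd describes, in which each side proves it holds a shared secret before the other trusts it, can be sketched as follows. TETRA’s real authentication algorithms and key hierarchy are not described in this interview, so this sketch substitutes HMAC-SHA256 from Python’s standard library and a single pre-shared key; `Party`, `respond` and `mutual_authentication` are hypothetical names.

```python
import hashlib
import hmac
import secrets


def respond(key: bytes, challenge: bytes) -> bytes:
    """Stand-in response function: HMAC-SHA256 (NOT TETRA's real algorithm)."""
    return hmac.new(key, challenge, hashlib.sha256).digest()


class Party:
    """A terminal or base station holding a (hypothetical) shared secret key."""

    def __init__(self, name: str, key: bytes):
        self.name = name
        self._key = key

    def answer(self, challenge: bytes) -> bytes:
        return respond(self._key, challenge)

    def authenticates(self, other: "Party") -> bool:
        """Send a fresh random challenge and check the other side's answer."""
        challenge = secrets.token_bytes(16)
        expected = respond(self._key, challenge)
        return hmac.compare_digest(expected, other.answer(challenge))


def mutual_authentication(base_station: Party, terminal: Party) -> bool:
    # Step 1: the base station authenticates the terminal.
    if not base_station.authenticates(terminal):
        return False
    # Step 2: the terminal authenticates the base station; this is the check
    # that lets a terminal reject a false base station lacking the key.
    return terminal.authenticates(base_station)


shared_key = secrets.token_bytes(16)
base_station = Party("base station", shared_key)
terminal = Party("terminal", shared_key)
false_base_station = Party("false base station", secrets.token_bytes(16))

print("genuine network:", mutual_authentication(base_station, terminal))
print("terminal accepts false base station:", terminal.authenticates(false_base_station))
```

A false base station without the key cannot answer the terminal’s challenge, which is the property Murgatroyd relies on. The sketch leaves out session-key derivation, key management and relay attacks, all of which a deployed protocol has to handle.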

KZ: Do you think TEA1 is secure enough to be used for critical infrastructure?

BM: Hah. Well that’s a yes-no question to a complex subject isn’t it? I think … it depends who’s attacking it and whether high-value targets … are using TEA1. And the trouble is, the world has changed … in the last couple of years … where you could have attacks on things like pipelines and other things connected by telemetry. And if they’re using TEA1, well we know that certainly a government actor could have a go at them in a sort of short order. Because that’s exactly what the researchers have proved.

KZ: If TEA1 is used … you have the potential to inject false commands into the stream, so you could tell a breaker to open in order to cause a blackout. You could tell a valve on a pipeline to open or close… or if we’re talking about a railway signaling system, you could potentially instruct a track switch to send a train onto a different track …

BM: But what you’ve described are attacks on the infrastructure. What the researchers have found is that you may be able to decrypt messages on the system in fairly short order. That’s different from being able to [inject] false messages. Putting in a false message is much more tricky. You’ve got to actually have a false base station not on the network to be able to do that. And that’s what authentication is all about, the fact that you should only have legitimate base stations on a network, which is proved by mutual authentication. So I’m not sure how you can get from looking at the signaling on telemetry or a railway system … to actually being able to attack it. [In the WIRED story, the researchers disputed Murgatroyd’s assertion that this wouldn’t be possible.]

KZ: If we switch now to the delayed decryption oracle attack the researchers also discovered, regardless of which algorithm you’re using, they say an attacker can not only decrypt communication using this vulnerability but also potentially inject false messages.

BM: That’s right. This is the one they highlighted as being most important, next to the TEA1 attack. But the requirements to do that are considerable. You need to actually provide a false base station which will overpower the proper base station…. How practical it would be [to exploit this?] We don’t know. [The researchers] are able to demonstrate this…. So there is a problem [but] it is one that [we have] fully mitigated [now].

KZ: What do you mean mitigated? Certainly not for the customers who are using the algorithms currently.

BM: Well, no. Quite.… As it stands at the moment, it’s not mitigated.… But the standard has now been amended to include these mitigations.

KZ: So going forward, any manufacturer who builds a product now will look at the new standard and build the product according to the new standard.

BM: Yep.

KZ: So we’re talking three or four years there will be products with mitigation?

BM: I think quicker than that. The [revised] standard [with mitigations] was released in October last year and the [new] algorithms have been available since, I think, March this year. But the migrations should be well underway. All the TETRA manufacturers … have been aware of this ever since [the researchers first disclosed the vulnerabilities to us] in early 2022. [But] implementation of the new standard [actually] started in 2019, two years ahead of the [researchers’ vulnerability] disclosures…. There were three mitigations that were necessary as a result of the researchers’ work, and we’re very grateful for that, because they showed us three vulnerabilities that we weren’t really aware of.

KZ: In addition to the three vulnerabilities they showed you, the researchers also found an anomaly with the TEA3 algorithm — an S-box that looks suspicious to them but they didn’t feel they had the skills to analyze.

BM: In the UK I’ve asked our security authorities whether that constitutes a weakness and luckily it doesn’t. But it is an anomaly, that’s for sure. There’s no doubting that. But it doesn’t actually affect the strength of the algorithm, according to the UK National Cyber Security Centre.

KZ: I talked with a cryptographer who said that it “absolutely reeks of a deliberate backdoor. The way the S-box is going to work is that over time the key stream will slowly become less diverse and it will do it in a way that’s highly dependent on the initial key. I expect we’ll see a published attack within six months maybe a year that targets this,” he told me.

BM: I asked our security people — cryptographers — and they said it wasn’t a problem. They obviously didn’t go into any more detail than that, but [they didn’t say] it was a deliberate backdoor for sure.

KZ: When you say you asked cryptographers, are you saying you asked your internal TETRA cryptographers if the S-box was a problem?

BM: No I asked UK government cryptographers.

KZ: You asked government cryptographers if the S-box being weakened was a problem and they said “no, absolutely no problem.” Of course they’re going to tell you it’s not a problem.

BM: Well, okay. I can’t second-guess what the motives are that they have behind that.

[After this interview I spoke with Gregor Leander, professor of computer science and a cryptographer who is part of the security research team known as CASA at Ruhr University Bochum in Germany. He examined the S-box and said it’s very poorly done, but as far as he and his team could tell, it’s not exploitable. “In many ciphers, if you used such a box it would break the cipher very badly,” he told me. “But the way it’s used in TEA3, we couldn’t see that this is exploitable.” He said he’d “be very surprised if it leads to an attack that’s practical.”]

KZ: Let’s talk about the fact that the researchers found vulnerabilities that ETSI wasn’t aware of. They are criticizing ETSI for keeping the algorithms proprietary for a quarter of a century and preventing anyone from analyzing them and finding vulnerabilities during that time. Why does ETSI continue to keep these algorithms secret?

BM: Well, I can tell you the reason why — because the new algorithms we’ve got are also private — and that was at the strong recommendations of governments.

KZ: But is that in the best interest of the public that are using these algorithms?

BM: Well it’s a moot point isn’t it, really. That’s a difficult thing to say “yes it’s to the benefit of the public or not.” There’s no evidence of any attacks on … TETRA that we know of.

KZ: An intelligence agency isn’t going to tell you they’re exploiting it.

BM: Yeah that’s true. Although what you’ve said relates to the algorithm itself, the rest of the protocol is open. So it’s only the algorithms themselves [that are secret]. And the vulnerabilities [the researchers found] — apart from [the backdoor in] TEA1 — were all nothing to do with the algorithms. They were all to do with the protocol within the standard…. That’s why we changed the standard.

KZ: Who’s requiring that the algorithms be kept secret? Who’s making that decision?

BM: I wouldn’t say requiring; it’s a strong recommendation — from governments.

KZ: ETSI is keeping the algorithms secret because the government requested it?

BM: Because some of the algorithms involve critical national security, in terms of public safety.

KZ: But, as the researchers point out, an algorithm should be secure whether or not it’s public. It shouldn’t base its security on being kept secret.

BM: Yeah. I don’t know quite what the answer is. The fact is that these were private, and the fact is the new ones are also private. So that’s the state-of-play at the moment. Whether that changes in the future I don’t know.

KZ: If you’re saying that the only reason they’re secret is because the government has advised it, can ETSI decide on its own to make them public?

BM: I’d have to say yes.

KZ: So why don’t you?

BM: I don’t know.

KZ: Have there been any discussions in ETSI about making them public? Creating an algorithm a quarter of a century ago and keeping it secret might have been the right thing to do in 1995, but is it the right thing to do in 2023?

BM: Well I think that might be the basis of a discussion within ETSI. I’m not sure that anything is going to change that quickly. When we went to the ETSI board to form the new special committee to develop these [new] algorithms, it was explained then that [these algorithms] would be secret on the grounds of national security. And the board accepted that.

KZ: There was no pushback.

BM: Not that I’m aware of.

KZ: Is there any discussion going forward about at least bringing in independent researchers to do full analysis, with the understanding that the findings they uncover can be made public? You could fix the problems they encounter, and after they’ve reviewed your fixes, they can make their findings public, while the algorithms remain secret.

BM: That would have been a great idea with the researchers this time. But they decided to release the algorithms as well [in addition to their findings]. But yeah scrutiny is very important.… I can’t see anything wrong with what you just said, apart from the fact that we’d rather if someone came in to do an independent review of the algorithms … that they wouldn’t then go and just give the information out.

KZ: Yes but that’s the problem that exists right now. If it’s all done quietly and behind the scenes, you can thank them for their work and then tell them to go away.

BM: Yeah that’s true.

KZ: And so there is accountability if they’re allowed to publish their findings and at the same time you have to address the issues they uncover. You can potentially control when they publish their findings. You can say we need six months to fix the vulnerabilities, and then when you prove to them that you fixed them, they can publish their findings.

BM: What you say is very valid.

KZ: Should we trust ETSI algorithms going forward?

BM: I’ve no reason to believe you shouldn’t.

KZ: But the public has a reason not to — the fact that they’re secret.

BM: I can think of all sorts of algorithms that, over time, they become weak. And lots of them have been public ones as well. Sure, algorithms may not have a life of a quarter of a century, that’s for sure…. [But] we have no reason to produce dodgy algorithms, if you like.

This TETRA standard and its implementations has been really at the forefront of critical communications for the last quarter of a century, and it’s been highly successful. And despite these few vulnerabilities that have been discovered, [it] has actually been, in our view, very secure and we’re really proud of that. Although they found vulnerabilities, partially in TEA1, I don’t think it’s a bad record actually.… It doesn’t demonstrate the fact that we had a weak system in the first place.

That was badly worded. I think, generally, TETRA is regarded as secure, strong, reliable and all the things you need for public safety communication systems.

KZ: But no one really knew how secure it was. So it has that reputation through obscurity not through verifiability.

BM: Well [obscurity is] also a way of maintaining security.

Zero Day is a reader-supported publication.